Studio features a revolutionary solver for processing motion capture data in real-time. Recordings can be further refined using the editor and post-processor. Apply animation data directly to character assets using the character engine. Plugins and pipelines complete the motion capture workflow.

ONE-TIME FEE

$1,799

Studio is user-friendly while providing many advanced features. It is very simple to connect a system and record your first take. Users have the option to master more advanced features such as editing, retargeting and post-processing.

Locomotion is the most important aspect of inertial motion capture, often requiring the most editing in post-production. Studio solves firm foot placements and realistic ground plane collisions, compensates for foot sliding, and processes jumps and height tracking.

Positional drift is a natural limitation of inertial motion capture systems when not using hybrid trackers for absolute positioning. Studio features a displacement editor aimed at correcting positional drift on flat floors and when height tracking.

Studio can export data as FBX, BVH, C3D, ASF, AMC and CSV files. Data is automatically saved in our proprietary raw format for backup and further processing. The skeletal naming convention is compatible with most software applications.
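As a quick way to check that skeletal naming convention against another application, the joint names can be read straight out of an exported BVH file. The sketch below relies only on the standard BVH layout (a `HIERARCHY` section with `ROOT` and `JOINT` entries, followed by `MOTION`). The function name and the tiny sample skeleton are illustrative assumptions, not output from Studio.

```python
def bvh_joint_names(bvh_text: str) -> list[str]:
    """Return the ROOT and JOINT names of a BVH file, in file order."""
    names = []
    for line in bvh_text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "MOTION":
            # The hierarchy ends where the motion data begins.
            break
        if tokens[0] in ("ROOT", "JOINT") and len(tokens) >= 2:
            names.append(tokens[1])
    return names


# Hypothetical two-joint skeleton, for illustration only.
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT Spine
  {
    OFFSET 0.0 10.0 0.0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0.0 10.0 0.0
    }
  }
}
MOTION
Frames: 1
Frame Time: 0.0333333
0 0 0 0 0 0 0 0 0
"""

joint_names = bvh_joint_names(SAMPLE_BVH)
```

Comparing this list with the bone names a target application expects is a simple first check before importing a take.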

Studio broadcasts real-time data to a variety of animation software applications and game engines. You can broadcast motion capture data to Unreal, Unity, Maya, MotionBuilder and Blender or implement the streaming protocol to develop custom integrations.

Studio uses timecode to synchronize motion capture data. External timecode generators can be used to synchronize data with other systems such as optical stages and third-party face tracking solutions. UDP triggers can be used to control recordings.
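To illustrate how timecode lines up recordings from different systems, here is a minimal sketch that converts non-drop-frame `HH:MM:SS:FF` timecode into a frame count and computes the offset between two takes. The function names and the 30 fps rate are assumptions made for the example. This page does not describe how Studio handles timecode internally.

```python
def timecode_to_frames(timecode: str, fps: int) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to a frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def frame_offset(body_start: str, face_start: str, fps: int) -> int:
    """Frames by which the face recording starts after the body recording."""
    return timecode_to_frames(face_start, fps) - timecode_to_frames(body_start, fps)


# Assumed example: the face take starts 2 seconds and 15 frames after the body take.
offset = frame_offset("01:00:00:00", "01:00:02:15", fps=30)
```

Trimming the body take by `offset` frames (or delaying the face take by the same amount) brings the two recordings onto a common timeline.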

By combining kinematics with simulated physics and accelerometer-based positioning, Studio uses a powerful real-time locomotion solver to determine the overall displacement and foot placements of the skeleton during walking, jumping, running and sitting. Foot placements and floor interactions are further refined using colliders.

Studio features a powerful finger solver that corrects hand data in real-time. The solver will intelligently determine that certain hand poses are unnatural and correct them. Each digit calibrates dynamically to compensate for the sensors moving inside the glove and for magnetic interference.

Studio can track faces in real-time. The introduction of face tracking allows users to accomplish full performance captures without having to synchronize multiple systems. Face data consists of blendshapes and bones, which integrate seamlessly with the body and fingers. Face data can be retargeted to character assets in real-time.

Studio allows you to create custom skeletons, which match the posture and proportions of the actor, by using the skeleton generator or the skeleton editor. The skeleton generator creates a skeleton file based on the actor’s measurements. The skeleton editor requires reference images of the actor and manual editing to create a more accurate skeleton. Accurate skeletons improve the overall accuracy of the capture.

You can load 3D models as static, dynamic or tracked props. Static props are motionless environment objects that help you direct animation narratives. Dynamic props are hand-held objects, such as tools or weapons, driven by additional sensors. Tracked props are driven by external trackers, which benefit from absolute positioning.

Studio can create virtual systems that emulate prerecorded data. You can take raw recordings and process them later with different calibrations and settings. Virtual systems are ideal for developing pipelines and plugins without having an actor wearing the system. Virtual systems are also used to process logged data.

Studio features tools for virtual production that use absolute positioning and external trackers. The software has multiple hybrid modes for fusion between positional and inertial data. Trackers can be used to drive props or override skeletal displacement. You can create and animate virtual production cameras to film your animation.

| Software (Minimum) | |
|---|---|
| Operating System | Windows 7, 8, 10, 11 (64-bit) |
| CPU | Dual-Core Processor |
| RAM | 4GB |
| GPU | Dedicated Graphics Card |
| HDD | 1GB |
| Other | OpenGL 3.2 (or newer) |

| Software (Recommended) | |
|---|---|
| Operating System | Windows 10, 11 (64-bit) |
| CPU | Quad-Core Processor |
| RAM | 16GB |
| GPU | GTX 1060 (or better) |
| HDD | 1GB |
| Other | OpenGL 3.2 (or newer) |

Quick, Intelligent, Semi-Automated

Produce spectacular animations quickly!

The editor and post-processor will improve motion capture recordings using advanced full-body inverse kinematics and intelligent solvers. Produce game-ready animations with minimal effort.

Locomotion is the most important aspect of inertial motion capture, often requiring the most editing in post-production. Foot tags can be edited manually before running the post-processor to correct locomotion.

How the skeleton interacts with the floor is key in producing realistic animations. The post-processor uses our physics engine and collision detection to produce realistic floor interactions.

Positional drift is a natural limitation of inertial motion capture systems when not using hybrid trackers for absolute positioning. The displacement editor can be used to correct positional drift.
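One simple way to think about drift correction: if the actor is known to have finished at a particular spot, the measured end error can be spread linearly over the take. The sketch below shows that idea only. It is not the displacement editor's algorithm, and the function name and the 2D sample positions are assumptions for illustration.

```python
def remove_linear_drift(positions, true_end):
    """Spread the end-of-take position error linearly across all frames.

    positions: list of (x, y, ...) tuples, one per frame.
    true_end:  the position the last frame should have had.
    """
    count = len(positions)
    if count < 2:
        return [tuple(p) for p in positions]
    # Error accumulated by the final frame, per axis.
    error = tuple(p - t for p, t in zip(positions[-1], true_end))
    corrected = []
    for index, position in enumerate(positions):
        weight = index / (count - 1)  # 0 at the first frame, 1 at the last
        corrected.append(tuple(p - weight * e for p, e in zip(position, error)))
    return corrected


# Assumed example: the actor walks along x while y drifts by 0.2 units.
drifting = [(0.0, 0.0), (1.0, 0.1), (2.0, 0.2)]
fixed = remove_linear_drift(drifting, true_end=(2.0, 0.0))
```

The first frame is left untouched and the last frame lands exactly on the known end position, so the correction never introduces a jump at either end of the take.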

Hybrid trackers can be used to override skeletal displacement with absolute positioning. The post-processor can then be used to create firm foot placements and realistic ground interactions.

The post-processor makes corrections to the original data without producing glitches such as kinematic pops or vibrations. The quality of the take is improved without excessive filtering.

Filters are optional and can be applied to the data after processing. You can smooth out entire takes and remove unwanted details to produce perfect looking character animations.
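As a rough illustration of what smoothing a take involves, the sketch below applies a centered moving average to a single animation channel. It is a generic filter chosen for the example, not a description of the filters Studio ships. The function name, window size and sample values are assumptions.

```python
def moving_average(samples, window=5):
    """Smooth one channel with a centered moving average.

    The window shrinks near the ends of the take so every frame gets a value.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    smoothed = []
    for index in range(len(samples)):
        start = max(0, index - half)
        stop = min(len(samples), index + half + 1)
        smoothed.append(sum(samples[start:stop]) / (stop - start))
    return smoothed


# Assumed example: a single-frame spike in an otherwise still channel.
spiky = [0, 0, 3, 0, 0]
smooth = moving_average(spiky, window=3)
```

A wider window removes more detail, which is why filtering is best treated as an optional last step rather than the main cleanup tool.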

This innovative high-level approach to cleaning motion capture data is both simple and intuitive. The real-time solver produces tags, which are high-level properties of the recording. The editor can be used to manipulate tags and the result is sent to the post-processor for automated data cleaning.

Foot tags stabilize each foot to produce accurate foot placements and can be used to modify overall locomotion. Toe deformations are recalculated through ground collision detection.

Hand tags lock each hand into a fixed state and deform the skeleton’s upper body to ensure the palm reaches its target.

Hip tags define whether the weight of the skeleton is supported by its root, which stabilizes the hips when sitting down on the floor plane or on a chair.

Jump tags create a simulated jump using the physics engine based on duration, trajectory and take-off speed. The jump parabola can be fine-tuned in the jump editor.

A further tag defines whether the skeleton is walking on a flat surface and is used to flatten vertical drift when height tracking.
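The relationship between a jump's duration, take-off speed and parabola can be sketched with basic ballistic motion. This is textbook projectile physics under constant gravity, shown only to make the terms concrete. It is not Studio's physics engine, and the function names and the half-second jump are assumptions.

```python
GRAVITY = 9.81  # m/s^2, standard gravity


def takeoff_speed_for_duration(duration: float) -> float:
    """Vertical take-off speed that keeps the body airborne for `duration` seconds."""
    return GRAVITY * duration / 2.0


def jump_height(time: float, takeoff_speed: float) -> float:
    """Height above the floor at `time` seconds after take-off (never below 0)."""
    return max(0.0, takeoff_speed * time - 0.5 * GRAVITY * time * time)


def apex_height(takeoff_speed: float) -> float:
    """Highest point of the jump parabola."""
    return takeoff_speed ** 2 / (2.0 * GRAVITY)


# Assumed example: a jump that lasts half a second.
speed = takeoff_speed_for_duration(0.5)
peak = apex_height(speed)
```

Shortening or lengthening the duration changes the required take-off speed, which in turn raises or lowers the apex of the parabola.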

In the following video we edit a simple footstep where the foot detaches from the ground prematurely. We extend the end of the tag to change the duration that the foot is attached to the floor. The post-processor automatically corrects foot placements throughout the take.

Hands interacting with the floor will produce hand tags, which are then used to process stable hand placements. Hand tags are useful for gestures involving hand-floor interactions, walking on all fours, doing pushups, gripping or pushing against the stationary environment.

When height tracking, the system detects each footstep based on body accelerations and intelligent gesture detection. The post-processor is used to correct the data, processing floor planes and overcoming positional drift while retaining firm foot placements. Hybrid tracking with displacement override is also available.

Jumps are moments when the skeleton becomes airborne as both feet detach from the ground simultaneously. While they are simulated in real-time, these moments are also marked by the software as jump tags. Jump tags can be added, modified or removed before post-processing the animation.

Sitting on the floor or on a calibrated chair will produce hip tags, which are then used to process stable hip placements. Chairs can be calibrated in real-time to simulate the actor sitting on an elevated surface. Hip tags and foot tags will further refine how the body interacts with the ground and perfect the transition from sitting to standing.

In inertial motion capture, locomotion is primarily driven by one bone that is supporting the weight of the skeleton. Humans distribute their weight between both feet. Locking is the process through which both feet lock to the ground to support the distributed weight of the skeleton. This video showcases the differences between no locking, real-time locking and post-processing the locked feet.

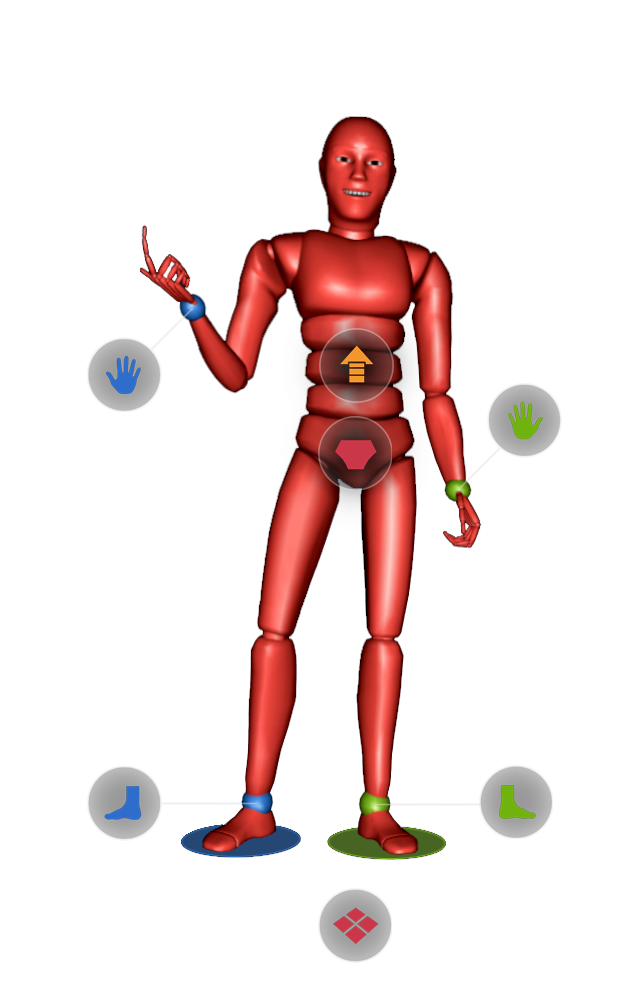

Map, Pose, Retarget

Retarget animations directly to characters.

Applying motion capture data to characters is an important step in character animation. The character engine allows you to import, pose, retarget and animate humanoid assets.

The 3D engine supports textures, shaders, skin deformers and blendshapes. You can import characters, environments and other textured 3D models. While these features are common in game engines and animation software, we optimized them for motion capture so that you can animate characters with zero latency.

Automatically create skeleton mapping templates and apply them to connect source skeletons to target character assets. The mapper can traverse skeletons and intelligently connect joints to their corresponding body parts. While the mapper is fully automated, you can manually modify and save templates for future use.

In addition to bones, most character models use blendshapes to achieve individual facial expressions. The reshaper analyzes your character's blendshape channels and automatically generates a face map. Face maps establish connections between the source and target blendshape channels, which can be edited manually or saved for future use.
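Conceptually, a face map is a lookup from source channel names to target channel names. The sketch below builds one by matching names after stripping case and punctuation. This is a deliberately naive stand-in for the reshaper, whose actual analysis is not described here. The function names and channel names are hypothetical.

```python
def normalize(name: str) -> str:
    """Lowercase a channel name and drop separators such as '_' or '.'."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def build_face_map(source_channels, target_channels):
    """Map each source channel to the target channel with the same normalized name."""
    targets = {normalize(target): target for target in target_channels}
    face_map = {}
    for source in source_channels:
        key = normalize(source)
        if key in targets:
            face_map[source] = targets[key]
    return face_map


# Hypothetical channel names; unmatched channels are left for manual editing.
face_map = build_face_map(
    ["jawOpen", "eyeBlinkLeft", "browUp"],
    ["Jaw_Open", "EyeBlink_Left"],
)
```

Channels without a match (here `browUp`) are simply absent from the map, which mirrors the manual editing step: anything the automatic pass misses can be connected by hand and saved.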

A mask is an intermediary animation layer used to improve the overall result of face tracking. The masker is used to control the face animation by adjusting individual blendshape channels, calibrating neutral faces and fine-tuning face bone orientations. The masker features a testing mode for validating the mapping of the face when retargeting data to a character asset.

Whether your character is a photorealistic human or a cartoon animal, the retargeter will intelligently translate body, finger and face data to your target. Important properties are recalculated using our proprietary rig, including firm foot placement, articulation of the spine, arm positions, finger and thumb orientations and facial expressions.

Posing is a key aspect of retargeting and often a time-consuming process for the user. The animator must reorient each bone so that the character pose matches the pose of the source skeleton. Manual posing leads to inaccuracies in the binding of the two skeletons. The poser will automatically adjust your character to create the perfect binding.

While the automated retargeting process of the character engine will produce good animations, users have the option to control several properties of the body including arm positions, feet positions, upper body slouching, shoulder shrugging and floor offsets. Floor offsets control whether the character is walking on the bones or on the polygons of the mesh.

You can broadcast retargeted animations from the character engine to game engines. Broadcasting retargeted data allows you to map animations one-to-one at the plugin level, making it convenient to animate characters in game engines. The character engine is optimized for real-time motion capture and does not add latency to the animation pipeline.

The retargeter can be fully automated by running retarget sequences, which automatically map, pose and bind motion capture data to character assets. You can manually control every step of the retargeting process and make quick adjustments to the bind pose. For example, you can distance the hands away from the pelvis, bend the knees, adjust the slouch and relax the shoulders.

By combining face, body and finger data at the mannequin level, you can now record full performances. Full performances can be retargeted directly to character assets using the character engine. Full performances can also be broadcast to game engines and other third-party software applications in real-time.

Please contact us with questions, to schedule a live demo or to request a quote at info@nansense.com.