Inertial systems have limitations that are time-consuming to fix in conventional animation software. Our editor and post-processor are designed to overcome those limitations intelligently, semi-automatically and with minimal effort, so that animators can correct takes in minutes. Knowing that you can fix anything is a game changer.

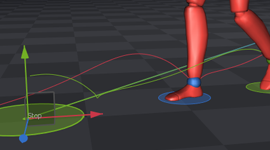

Locomotion is an important aspect of inertial motion capture. Foot placements define how the skeleton moves forward, and editing them corrects the overall displacement of the take.

How the skeleton interacts with the floor is key to producing realistic animations. The post-processor uses our physics engine and collision detection to produce realistic floor interactions.

Positional drift is a natural limitation of inertial motion capture systems when hybrid trackers are not used for absolute positioning. The displacement editor can be used to correct positional drift quickly.
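The displacement editor's internals aren't described here, but one common way to remove drift is to measure the error at a frame where the true position is known (for example, the actor returning to a marked spot) and spread that error linearly over the take. A minimal sketch, assuming root positions are plain (x, y, z) tuples; `correct_linear_drift` is a hypothetical name:

```python
def correct_linear_drift(positions, target_end):
    """Spread the end-point error linearly over the take.

    positions: one (x, y, z) root position per frame (hypothetical format).
    target_end: where the root is known to be on the last frame.
    """
    n = len(positions)
    if n == 0:
        return []
    if n == 1:
        return [tuple(target_end)]
    # Error between the known position and the drifted position on the last frame.
    error = [t - p for t, p in zip(target_end, positions[-1])]
    corrected = []
    for i, pos in enumerate(positions):
        w = i / (n - 1)  # 0 on the first frame, 1 on the last frame
        corrected.append(tuple(p + w * e for p, e in zip(pos, error)))
    return corrected
```

This only shifts the root; the footsteps would still need to be re-solved afterwards so the feet do not slide.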

Hybrid trackers can be used to override skeletal displacement with absolute positioning. The post-processor can then be used to create firm foot placements and realistic ground interactions.

The post-processor makes corrections to the original data without producing glitches and errors such as IK pops or vibrations. The take is cleaned without filtering or smoothing important data.

Filters are optional and can be applied to the data after processing. You can smooth out entire takes and remove unwanted details to produce perfect-looking character animations.
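The filters themselves aren't specified. As a generic example of post-processing smoothing, here is a centered moving average applied to a single animation channel; the function name and the `radius` parameter are illustrative assumptions:

```python
def moving_average(values, radius=2):
    """Centered moving average over one channel (one value per frame).

    The window shrinks near the start and end of the take, so the output has
    the same number of frames as the input.
    """
    n = len(values)
    out = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n, i + radius + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out
```

For example, `moving_average([0, 0, 9, 0, 0], radius=1)` spreads the spike into `[0.0, 3.0, 3.0, 3.0, 0.0]`. A larger `radius` smooths more and removes more detail. Averaging rotation channels directly can misbehave near angle wrap-around, so a real filter would typically unwrap angles or work on quaternions.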

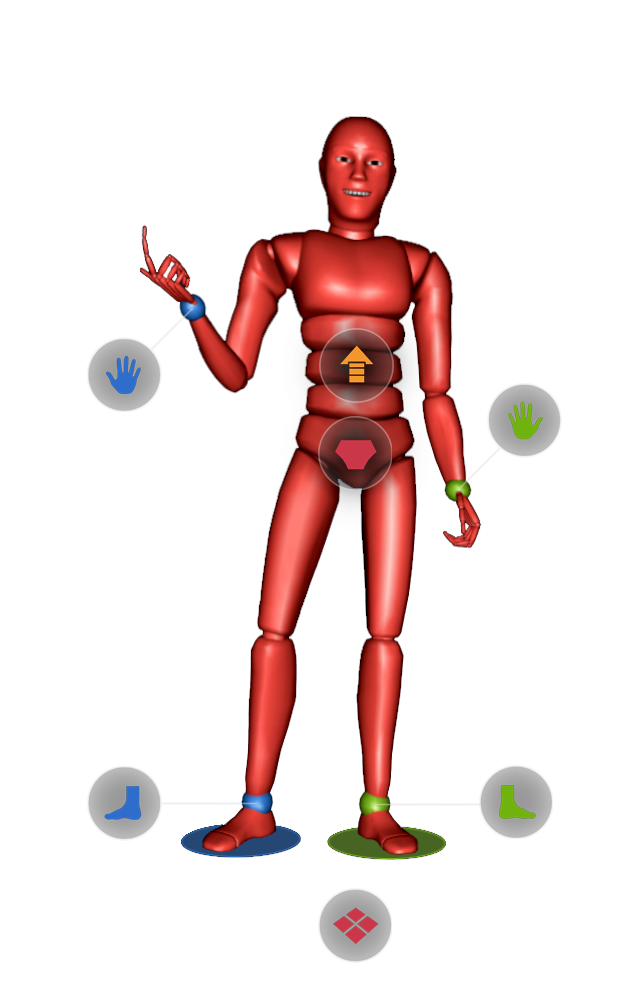

The editor provides tools for manipulating and batch editing tags that affect locomotion, foot placement, positional drift, floor collisions and jumps. Our goal has been to make the editor simple to use. You only edit the end points of each limb and the root of the skeleton. The remainder of the body is calculated by the post-processor using inverse kinematics, physics and advanced solvers.
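The full-body solver is not documented here. To illustrate how moving only an end point can determine the joints between it and the root, this sketch uses the textbook analytic two-bone IK solution in 2D (for example hip, knee and ankle); the function names are hypothetical:

```python
import math


def two_bone_ik(l1, l2, tx, ty):
    """Planar two-bone IK with the root joint at the origin.

    Returns (root_angle, bend_angle) in radians: root_angle is the direction of
    the first bone, bend_angle is the middle joint's bend relative to the first
    bone (0 means straight). Unreachable targets are clamped, so the limb points
    at the target at full (or minimum) extension.
    """
    base = math.atan2(ty, tx)
    d = math.hypot(tx, ty)
    # Keep the distance inside the reachable range and away from zero.
    d = min(l1 + l2, max(d, abs(l1 - l2), 1e-9))
    # Law of cosines: interior angle at the middle joint.
    cos_inner = (l1 * l1 + l2 * l2 - d * d) / (2 * l1 * l2)
    bend = math.pi - math.acos(max(-1.0, min(1.0, cos_inner)))
    # Law of cosines: angle between the first bone and the root-to-target line.
    cos_alpha = (l1 * l1 + d * d - l2 * l2) / (2 * l1 * d)
    alpha = math.acos(max(-1.0, min(1.0, cos_alpha)))
    return base - alpha, bend


def forward(l1, l2, root_angle, bend_angle):
    """End-effector position for the given joint angles (forward kinematics)."""
    x = l1 * math.cos(root_angle) + l2 * math.cos(root_angle + bend_angle)
    y = l1 * math.sin(root_angle) + l2 * math.sin(root_angle + bend_angle)
    return x, y
```

Feeding the solved angles back through `forward` reproduces the target when it is reachable. Of the two mirror-image solutions, this one always bends the middle joint counterclockwise; a full-body solver has to pick the bend direction, and handle many more joints, from the rest of the pose.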

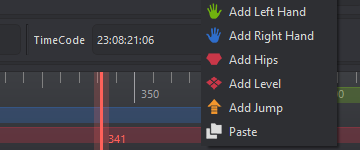

Foot tags stabilize each foot to produce accurate foot placements and can be used to modify overall locomotion. Toe deformations are recalculated through ground collision detection.

Hand tags lock each hand in place and deform the skeleton’s upper body so that the palm reaches its target.

Hip tags define whether the skeleton’s weight is supported by its root, which stabilizes the hips when sitting on the floor plane or on a chair.

Jump tags create a simulated jump with the physics engine based on duration, trajectory and take-off speed. The jump parabola can be fine-tuned in the jump editor.

Floor plane tags define whether the skeleton is walking on a flat surface and are used to flatten vertical drift when height tracking.
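The physics engine's parameters are not published, but the basic relationship between a jump's duration, trajectory and take-off speed follows from projectile motion: for a flight of duration T between heights y0 and y1 under gravity g, the vertical take-off speed is vy = (y1 - y0)/T + gT/2. A minimal sketch, assuming constant gravity, no air resistance, a y-up axis in meters and a hypothetical 60 fps sampling rate; `jump_trajectory` is an illustrative name:

```python
G = 9.81  # gravity in m/s^2 (assumed; the y axis points up)


def jump_trajectory(start, end, duration, fps=60):
    """Ballistic root trajectory from take-off point `start` to landing point
    `end` (x, y, z in meters) lasting `duration` seconds.

    Returns (take_off_vy, samples): the vertical take-off speed in m/s and one
    (x, y, z) sample per frame, including both end points.
    """
    (x0, y0, z0), (x1, y1, z1) = start, end
    # Solve y1 = y0 + vy*T - G*T^2/2 for the vertical take-off speed vy.
    vy = (y1 - y0) / duration + G * duration / 2
    frames = max(1, round(duration * fps))
    samples = []
    for i in range(frames + 1):
        t = duration * i / frames
        s = i / frames  # constant horizontal speed: linear progress over time
        samples.append((
            x0 + s * (x1 - x0),
            y0 + vy * t - 0.5 * G * t * t,
            z0 + s * (z1 - z0),
        ))
    return vy, samples
```

For example, `jump_trajectory((0.0, 1.0, 0.0), (1.2, 1.0, 0.0), 0.5)` gives a take-off speed of about 2.45 m/s and a peak about 0.31 m above the take-off height, halfway through the flight. For equal take-off and landing heights the apex rises with the square of the duration (gT²/8), which is why small changes to a jump's length noticeably change its height.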

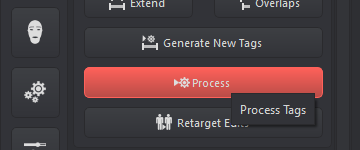

This innovative high-level approach to cleaning motion capture data is both simple and intuitive. The real-time solver produces tags, which are high-level properties of the recording. The editor can be used to manipulate tags and the result is sent to the post-processor for automated data cleaning. You can now produce spectacular motion capture animations very quickly.

As the real-time solver computes motion capture data, it detects and tags important properties of the animation such as footsteps, floor planes, hand placements, hip placements and jumps. Tags correspond to the behavior of each limb and the root of the skeleton.

Animators can use the editor tools to modify tags, and automated tools can batch-process and simplify them. This step is optional and not always necessary, but it gives the animator full control over how the data is processed.
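As an illustration of what batch simplification can mean, this sketch merges tags on the same limb that are separated by only a few frames and then drops tags too short to matter. The `Tag` fields, kind labels and thresholds are assumptions, not the editor's data model:

```python
from dataclasses import dataclass


@dataclass
class Tag:
    kind: str   # e.g. "foot", "hand", "hip", "jump" (hypothetical labels)
    limb: str   # e.g. "left_foot" (hypothetical naming)
    start: int  # first frame, inclusive
    end: int    # last frame, inclusive


def simplify_tags(tags, max_gap=3, min_length=5):
    """Merge same-limb tags separated by at most `max_gap` untagged frames,
    then drop tags shorter than `min_length` frames."""
    merged = []
    for tag in sorted(tags, key=lambda t: (t.kind, t.limb, t.start)):
        prev = merged[-1] if merged else None
        same_track = prev is not None and (prev.kind, prev.limb) == (tag.kind, tag.limb)
        # Number of untagged frames between the previous tag and this one
        # (negative when the tags overlap).
        if same_track and tag.start - prev.end - 1 <= max_gap:
            prev.end = max(prev.end, tag.end)
        else:
            merged.append(Tag(tag.kind, tag.limb, tag.start, tag.end))
    return [t for t in merged if t.end - t.start + 1 >= min_length]
```

With the defaults, two left-foot tags covering frames 0-10 and 13-30 become a single tag for frames 0-30, while a stray two-frame tag is removed. The input tags are copied rather than modified.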

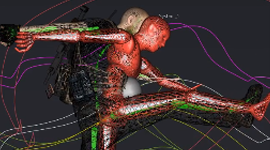

The edited tags are sent to the post-processor. The post-processor uses advanced full-body inverse kinematics and intelligent solvers to clean the take. The post-processor avoids aggressive smoothing and maintains as much of the original data as possible.

The simplest form of editing is improving foot placements and locomotion. In the following video, we edit a simple footstep where the foot detaches from the ground prematurely. We extend the end of the tag to lengthen the time the foot stays attached to the floor. The post-processor then automatically corrects foot placements throughout the take based on the tags.
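A hard pin illustrates why extending a tag changes the result: every frame inside a foot tag keeps the foot where it was on the tag's first frame, so moving the tag's end keeps the foot planted for longer. This is a simplified sketch with a hypothetical `(start, end)` tag format and function name; a production solver would also adjust the leg with IK and blend in and out of each lock to avoid the pops a hard pin can cause.

```python
def lock_foot(foot_positions, tags):
    """Pin the foot to its position on the first frame of each tag.

    foot_positions: one (x, y, z) foot position per frame.
    tags: list of (start, end) frame ranges, inclusive (hypothetical format).
    """
    locked = list(foot_positions)
    last = len(locked) - 1
    for start, end in tags:
        anchor = foot_positions[start]
        for frame in range(start, min(end, last) + 1):
            locked[frame] = anchor
    return locked
```

With a foot that starts to lift on frame 3, a tag covering frames 0-2 lets it go early, while extending the tag to frames 0-3 keeps it on the ground one frame longer.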

A looping treadmill animation is an animation where the beginning and end are blended together seamlessly. These animations are often used in video games to animate the character you are controlling. The following video showcases how easy it is to generate a looping treadmill animation. We record a simple walk cycle, which will be the basis for our animation, and crop a segment of the take to process.
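One common way to make a cropped segment loop is a crossfade: blend the last few frames of the segment toward the frames that originally preceded its first frame, so the final frame flows into the first exactly as the original take did. A minimal sketch, assuming per-frame lists of in-place channel values (root forward motion removed separately); `make_loop` and `blend` are hypothetical names, and this is not necessarily how the software builds loops:

```python
def make_loop(frames, start, end, blend):
    """Crop frames[start:end] and crossfade its last `blend` frames toward the
    frames just before `start`, so the last frame leads into the first.

    frames: list of per-frame channel values (lists of floats).
    Requires start >= blend and end - start > blend.
    """
    if start < blend or end - start <= blend:
        raise ValueError("not enough frames around the segment to blend")
    loop = [list(f) for f in frames[start:end]]
    n = len(loop)
    for i in range(blend):
        w = (i + 1) / blend  # rises to 1 on the last frame of the segment
        src = frames[start - blend + i]  # frame that preceded the loop start
        dst = loop[n - blend + i]
        loop[n - blend + i] = [(1 - w) * a + w * b for a, b in zip(dst, src)]
    return loop
```

On the last frame the weight reaches 1, so that frame equals the one that originally preceded the segment and the jump back to the first frame reproduces a transition from the real take. Longer blends hide the seam better but alter more of the original cycle; angle channels would also need unwrapping before blending.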

| Requirement | Minimum | Recommended |
|---|---|---|
| Operating System | Windows 7, 8, 10, 11 (64-bit) | Windows 10, 11 (64-bit) |
| CPU | Dual-core processor | Quad-core processor |
| RAM | 4 GB | 16 GB |
| GPU | Dedicated graphics card | GTX 1060 (or better) |
| HDD | 1 GB | 1 GB |
| Other | OpenGL 3.2 (or newer) | OpenGL 3.2 (or newer) |

Use additional tools and pipelines to modify and visualize motion capture data as it is being edited.

The displacement editor is used to control the skeleton’s position on stage to combat positional drift. The post-processor will recalculate each individual step throughout the take to ensure clear footsteps.

The jump editor is used to control the skeleton’s position on stage when airborne. Each jump’s trajectory and duration can be modified manually in the viewport to ensure accurate takeoff and landing.

The ability to visualize motion capture data applied to the target character is important when editing your animation. Data can be pushed to the character engine where it is retargeted as it is being edited.

Data from the character engine can be sent directly to the game engine so that you can inspect the result as it is being edited. This is achieved using the broadcaster and our plugin library.

The following examples illustrate some of the more challenging scenarios you will experience when capturing inertial motion capture data. The videos showcase data produced by the real-time solver in blue alongside the post-processed result in red.

Hands interacting with the floor will produce hand tags, which are then used to process stable hand placements. Hand tags are useful for gestures involving hand-floor interactions, walking on all fours, doing pushups, gripping or pushing against the stationary environment. Walking like a quadruped with firm hand placements is now possible through post-processing.

When height tracking in pure inertial mode, the hardware and the software work together to detect each footstep based on a mixture of body accelerations and intelligent gesture detection. It is very quick to correct the data, process floor planes and overcome positional drift while retaining convincing foot placements. Hybrid tracking with displacement override modes is also available.
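The solver's mix of body accelerations and gesture detection isn't public. A much simpler stand-in that is often used for offline data treats a foot as planted when it is both close to the floor and nearly stationary; the function name and thresholds below are illustrative guesses, not values used by the software:

```python
def detect_contacts(heights, speeds, max_height=0.05, max_speed=0.2):
    """Return inclusive (start, end) frame ranges where the foot is near the
    floor (height in m) and nearly stationary (speed in m/s)."""
    ranges = []
    start = None
    n = min(len(heights), len(speeds))
    for i in range(n + 1):
        # The extra iteration (i == n) closes a contact lasting until the end.
        planted = i < n and heights[i] <= max_height and speeds[i] <= max_speed
        if planted and start is None:
            start = i
        elif not planted and start is not None:
            ranges.append((start, i - 1))
            start = None
    return ranges
```

In pure inertial mode the measured height itself drifts, so a threshold-only approach like this would likely need the kind of additional cues described above to stay reliable over a long take.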

Jumps are moments when the skeleton becomes airborne as both feet leave the ground plane simultaneously. While jumps are simulated in real time, the software also marks them as jump tags. Jump tags can be added, modified or removed before post-processing, and animators can fine-tune each jump’s trajectory for more realistic results.
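Following that definition, a jump can be found wherever neither foot is in contact with the ground. This sketch derives jump intervals from per-frame contact flags for each foot (for example, produced by a contact detector); the function name and the `min_frames` threshold for ignoring brief gaps are assumptions:

```python
def detect_jumps(left_contact, right_contact, min_frames=3):
    """Return inclusive (start, end) frame ranges where neither foot touches
    the ground for at least `min_frames` consecutive frames."""
    jumps = []
    start = None
    n = min(len(left_contact), len(right_contact))
    for i in range(n + 1):
        # The extra iteration (i == n) closes a jump lasting until the end.
        airborne = i < n and not left_contact[i] and not right_contact[i]
        if airborne and start is None:
            start = i
        elif not airborne and start is not None:
            if i - start >= min_frames:
                jumps.append((start, i - 1))
            start = None
    return jumps
```

Single-frame gaps, such as both feet briefly reading as lifted during a fast walk, fall below `min_frames` and are ignored.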

Sitting on the floor or on a calibrated chair produces hip tags, which are then used to process stable hip placements. Chairs can be calibrated in real time to simulate the actor sitting on an elevated surface. Hip tags and foot tags further refine how the body interacts with the ground and perfect the transition from sitting to standing.

In inertial motion capture, locomotion is primarily driven by one bone that supports the weight of the skeleton. In reality, humans distribute their weight between both feet. Locking is the process through which both feet lock to the ground to support the distributed weight of the skeleton. This video compares no locking, real-time locking and post-processed locking of both feet.